Kevin Eppacher, B.Sc.

Robotics Software Engineer specializing in perception-driven autonomy, semantic mapping, and intelligent control. Focused on developing systems that combine vision, optimization, and learning for real-world robotic applications.

Professional Work

A curated selection of my robotics research and engineering work, focused on real-time control, 3D perception, optimization, and applied AI-driven autonomy.

Table of Contents

- 1. SAGE – Semantic-Aware Guided Exploration

- 2. ROS 2 Reinforcement Learning Framework

- 3. Nonlinear MPC (CasADi/IPOPT)

- 4. PyTorch-Based MPC

- 5. AIRSKIN Sensitivity Measurement System

- 6. Monte Carlo Localization

- 7. Pan–Tilt UAV Tracking

- 8. Personal Projects

1. SAGE – Semantic-Aware Guided Exploration with Persistent Memory (Master Thesis)

A hybrid semantic exploration framework for multi-object search with persistent memory, integrating vision-language models, semantic mapping, and frontier-based navigation for intelligent exploration and reasoning.

📘 Read full description

Description

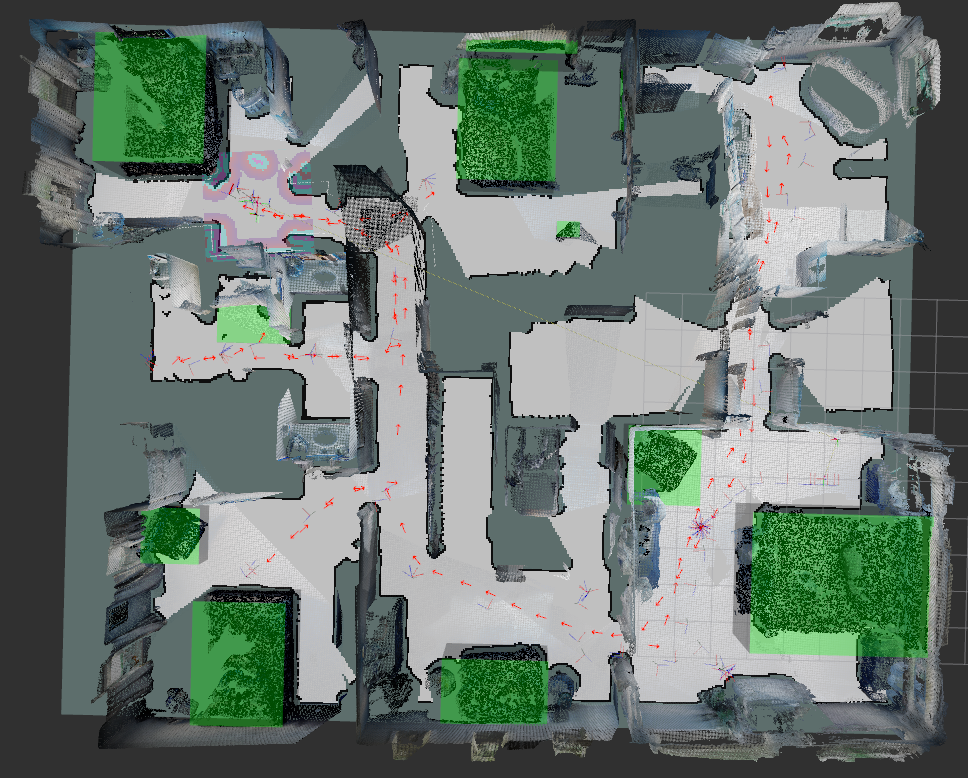

SAGE (Semantic-Aware Guided Exploration) is a framework designed for multi-object search in unknown environments using persistent 3D semantic memory.

It combines exploration, semantic understanding, and memory-based reasoning to enable robots to search and identify objects efficiently using open-vocabulary prompts.

The system integrates multiple AI and robotics components:

- OpenFusion as a 3D semantic SLAM mapper, acting as persistent memory for detected objects.

- Frontier-based exploration for geometric expansion of the map, enhanced by a Vision-Language Model (VLM) scoring system to evaluate which frontiers are most likely to contain queried objects.

- YOLO-E for real-time object detection and BLIP-2 for multimodal grounding, fused with OpenFusion’s semantic map for robust and context-aware detection.

- The combination of VLM-based reasoning and semantic memory allows the system to continuously refine its understanding of the environment and improve future searches.

Evaluation:

To validate SAGE, 3D semantic segmentation with OpenFusion is used to compare object detection and mapping accuracy against the same semantic classes.

Performance is measured using Success Rate (SR) and Success weighted by Path Length (SPL) metrics for single and multi-object search tasks.

Key Features

- Designed a full multi-modal semantic mapping pipeline combining depth, RGB, VLM reasoning, and 3D fusion.

- Implemented a persistent semantic memory layer using OpenFusion’s voxel representation.

- Developed frontier-based navigation with semantic scoring for multi-object search.

- Integrated YOLO-E, BLIP-2, and SEEM into real-time ROS 2 perception pipelines.

- Built a full evaluation pipeline using SR/SPL across multiple object classes.

The project is currently under active development, with further experiments in semantic fusion, frontier optimization, and real-world deployment in progress.

Frameworks & Tools

Links

Summary

SAGE introduces a semantic exploration architecture that fuses frontier-based exploration, 3D mapping, and vision-language models into a unified pipeline for open-vocabulary multi-object search.

Through persistent semantic memory and cross-modal fusion, it enables robots to recall, reason, and plan toward objects intelligently during long-term autonomous missions.

2. ROS 2 Reinforcement Learning Framework

A modular ROS 2 reinforcement-learning framework built for real-time robotics applications, enabling vectorized training, live introspection, and plug-in environments for reproducible DRL research.

📘 Read full description

Description

A modular ROS 2 Deep Reinforcement Learning (DRL) framework developed as a commissioned project to provide a standardized, extensible platform for end-to-end learning in robotics.

The goal was to lower the entry barrier for students and research teams by enabling quick prototyping, reproducible training, and real-time introspection within ROS 2.

The framework integrates tightly with Stable-Baselines3 and supports plug-in-based environments, allowing new tasks to be added without modifying the RL core.

It comes with practical examples (CarRacing, LunarLander, CartPole) and extensive documentation covering observation/action space design, reward shaping, and hyperparameter tuning.

It also supports vectorized environments for parallel training and can introspect live ROS 2 topics during learning, enabling developers to visualize and debug agent behavior in real time, as shown below.

The framework emphasizes reproducibility, scalability, and transparency, making it an ideal foundation for both industrial and educational reinforcement-learning applications.

Key Features

- Unified training and evaluation pipeline for ROS 2 environments

- Plug-in architecture for easily registering new environments

- TensorBoard integration and live ROS 2 topic publishing during training

- Supports vectorized environments for high-throughput parallel learning

- Real-time introspection of published ROS 2 topics to monitor agent behavior

- GPU-accelerated PPO, SAC, and TD3 training support

- Ready-to-use environments (CartPole, LunarLander, CarRacing)

- Clear YAML-based configuration for all algorithms

Frameworks & Tools

Built and tested under ROS 2 Jazzy with CUDA-enabled PyTorch 2.2 for GPU training.

Links

Summary

A professionally developed ROS 2 reinforcement learning framework unifying algorithm design, training, and evaluation in robotics.

It bridges educational usability and research-grade scalability, empowering students, researchers, and engineers to prototype and deploy intelligent robotic behaviors efficiently.

3. Nonlinear Model Predictive Controller (nMPC) for Differential Drive Mobile Robot

A high-precision nonlinear control system for differential-drive robots that predicts future motion and optimizes control inputs over a finite horizon, enabling smooth constraint-aware trajectory tracking.

📘 Read full description

Description

A nonlinear Model Predictive Controller (nMPC) based local planner developed for a Differential Drive Mobile Robot (DDMR).

Unlike conventional reactive planners, the nMPC predicts future robot states through a kinematic model and optimizes control inputs over a finite horizon.

The controller minimizes a cost function while enforcing hard constraints on obstacle clearance, velocity, and input bounds.

The implementation leverages:

- CasADi for nonlinear optimization formulation

- IPOPT (Interior Point Optimizer) for efficient real-time solving

- Python / ROS Noetic for seamless runtime integration

The planner was benchmarked against standard local planners:

- Dynamic Window Approach (DWA)

- Timed Elastic Band (TEB)

Results demonstrate smoother, dynamically feasible trajectories, particularly in cluttered or narrow environments.

The entire system was simulated in Gazebo using a TurtleBot, with a GPU-enabled Docker container for reproducibility.

See also the Optimization Lab – PyTorch-based MPC (ROS 2) for a lightweight educational re-implementation using PyTorch instead of CasADi.

Frameworks & Libraries

Links

Summary

A high-performance nonlinear MPC for mobile robots using CasADi and IPOPT delivering smooth constraint-aware motion planning and serving as a foundation for subsequent PyTorch-based re-implementations in ROS 2.

4. Optimization Lab – PyTorch-Based MPC for Robotics

An educational ROS 2 lab demonstrating real-time control through gradient-based optimization with PyTorch, teaching how to implement MPC without external solvers.

📘 Read full description

Description

A PyTorch-based Model Predictive Control (MPC) framework developed as part of a university optimization lab, demonstrating how numerical optimization can be applied to control and planning problems in robotics.

Unlike the earlier CasADi-based MPC, this version leverages PyTorch autograd and optimizers (Adam/LBFGS) directly, without relying on external NLP solvers, to teach students how to formulate and solve control problems from first principles.

Developed as a commissioned project, the lab provides a complete ROS 2 Jazzy package (mpc_local_planner) that serves as both a tutorial and a working local planner.

It includes comprehensive documentation explaining:

- optimization in localization, planning, and control,

- MPC cost shaping and constraints,

- and real-time optimization loops using PyTorch tensors.

Key Highlights

- Created an Optimization Lab for the UAS Technikum Vienna robotics curriculum

- Developed a didactic ROS 2 Jazzy package:

mpc_local_planner - Implemented nMPC with PyTorch optimizers (Adam, LBFGS, RMSProp)

- Demonstrated gradient-based MPC without CasADi/IPOPT

- Integrated with Nav2 for trajectory tracking using costmap penalties

- Provided extensive documentation, code comments, and exercises

- Used in teaching labs to show real-time control, optimization, and differentiable robotics concepts

Frameworks & Tools

Built and tested under ROS 2 Jazzy using CUDA-enabled PyTorch 2.2.

Links

Summary

A university lab project showcasing optimization for robotics using PyTorch as a numerical solver.

It bridges classical control and differentiable programming by re-implementing nMPC entirely in PyTorch, illustrating how learning-based and optimization-based control can converge within modern ROS 2 pipelines.

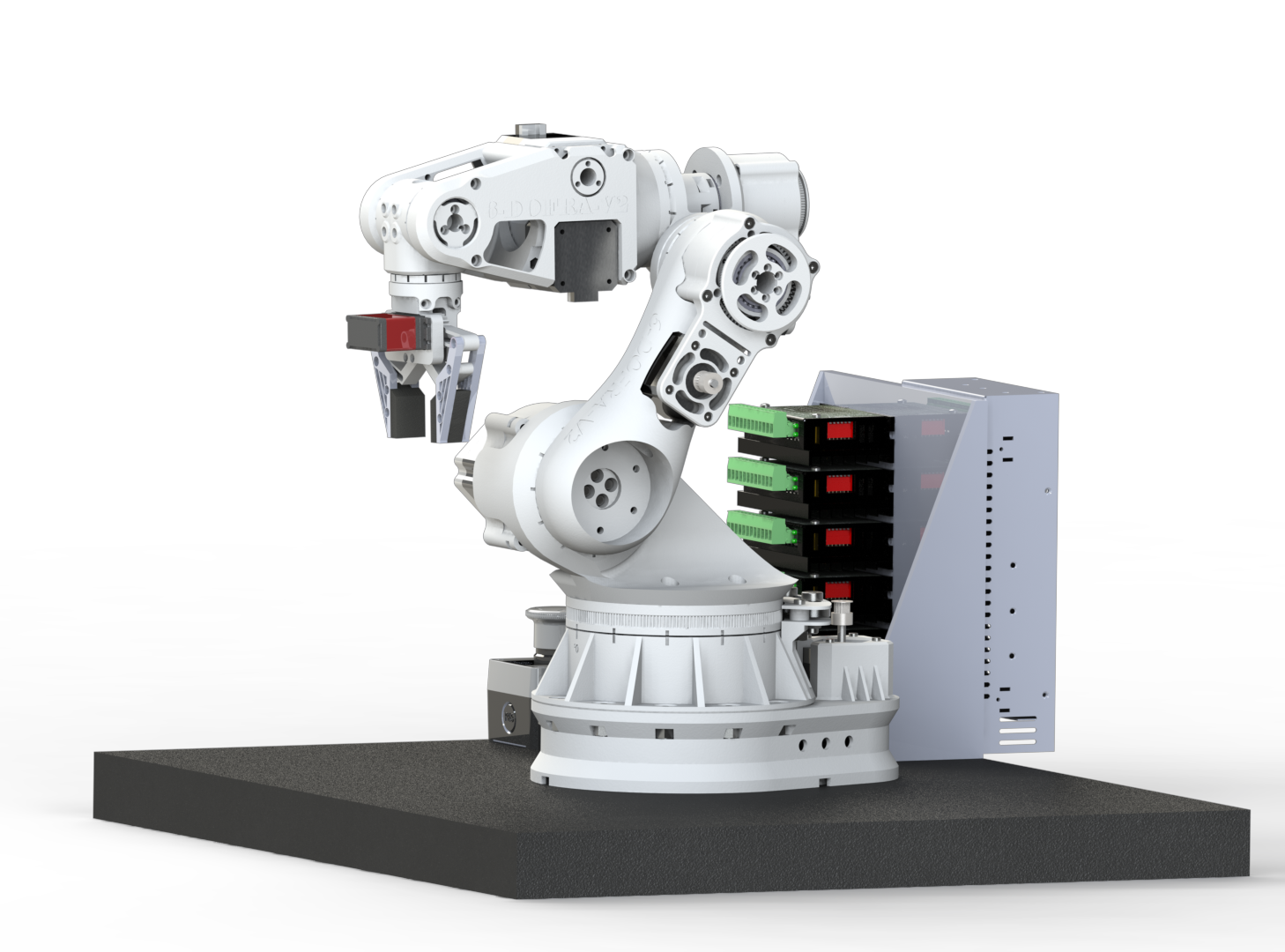

5. Automated Sensitivity Measurement System (AIRSKIN)

Designed and implemented an automated force–displacement measurement system using a UR10 robot, FT sensor, and RGB-D visualization, enabling reproducible AIRSKIN pad calibration.

📘 Read full description

Description

A collaborative project with Blue Danube Robotics – AIRSKIN developed at UAS Technikum Vienna to automate tactile pad sensitivity measurements.

The system measures the force and displacement required to trigger an AIRSKIN pad at defined grid points. From this, the spring constant and local sensitivity are derived to detect mechanical weak points and support further product development.

Built entirely with ROS Noetic and Docker, the system integrates:

- A UR10 industrial robot

- A force–torque (FT) sensor connected via the UR ROS bridge (TCP/IP)

- A custom ImGui C++ HMI for switching between Freedrive Mode and External Control Mode

Once all measurement points are defined, MoveIt executes a fully automated sequence. The system visualizes force vectors in RViz and overlays a 3D point cloud from an integrated RGB-D camera.

Frameworks & Libraries

Links

Summary

Automated robotic test bench for AIRSKIN pad calibration, measuring and visualizing tactile sensitivity through force–displacement mapping.

6. Monte Carlo Localization (Particle Filter) for Mobile Robots

Custom particle filter for 2D localization with optimized raycasting and resampling, achieving reliable pose estimation with only 100 particles.

📘 Read full description

Description

A Monte Carlo Localization (MCL) system, also known as a Particle Filter, implemented in C++ for Differential Drive Mobile Robots using ROS Noetic.

The algorithm estimates a robot’s pose on a known map by maintaining a set of weighted samples (“particles”), each representing a possible state hypothesis.

Key Highlights

- Reliable localization with only 100 particles, compared to typical 500–3000 AMCL particles.

- 80% randomized resampling per iteration for fast recovery from localization loss.

- Gazebo simulation using a TurtleBot in an apartment environment.

Frameworks & Libraries

Links

Summary

Robust and efficient Monte Carlo Localization achieving high accuracy with minimal particles through adaptive resampling, enabling fast and reliable robot pose estimation in dynamic indoor environments.

7. Design of a Cascaded Position and Velocity Controller for a Pan–Tilt Camera Tracking UAVs (Bachelor Thesis)

A cascaded control system enabling real-time UAV tracking with a high-speed pan–tilt camera, combining field-oriented motor control and Kalman-filtered trajectory prediction.

📘 Read full description

Description

A control system for tracking UAVs using a pan–tilt camera with cascaded position and velocity control.

Developed at Automation and Control Institute (TU Wien), the system enables accurate drone tracking in real time with predictive correction via Kalman filtering.

Frameworks & Libraries

Summary

Designed a cascaded position–velocity control system for a high-speed pan–tilt camera tracking UAVs, integrating FOC-driven PMSM motors and Kalman-filtered trajectory prediction for robust real-time tracking.

Personal Projects

Outside of my academic research and industrial work, I enjoy building and experimenting with robotic systems in my free time, exploring mechanical design, embedded control, and intelligent motion planning.

These projects allow me to prototype, test, and iterate on new ideas that blend classical robotics with modern AI-driven methods.

1. 6-DOF Robotic Arm – Design, Simulation & Control

Designed and built a 6-DOF robotic arm using stepper-driven harmonic-drive-inspired gear reductions, integrated with ROS MoveIt for collision-aware motion planning and synchronized sim-to-real execution.

📘 Read full description

Frameworks & Tools

Summary

A 6-DOF robotic arm designed and controlled entirely through open-source tools, combining 3D-printed mechanics, ROS MoveIt motion planning, and real-to-sim synchronization for flexible robotic manipulation.